Building Data Warehouse for Report Data

Mar. 24, 2021Objective

The overall objective of this effort is to build reliable and scalable pipeline to enable reporting services and further analytic service on the manufacturing data.This include following the necessary SLA’s on data security and complince to GDPR.The overall steps include but not limited to

- Transport sata from SQL server to object storage with any data loss

- Preserve databackup with Disaster recovery for upto 2 years from their insert date.

- Present the data after applying necessary transformation like contextualization of data

- Serve the data when requested with necessary parameters.

Phases of data transformation pipeline

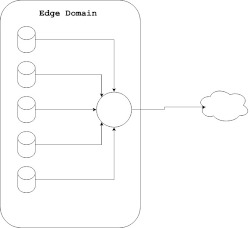

Client uses SQL server as a database for storing the data. Select a appropritate tool for replicating the DML statements and DDL statements in our case this would be HVR. HVR would capture the any changes along with opcode(operation code ie. 0 for insert and 1 for update 2 for delete) in flat file with 0x1 for delimiter and 0x2 for new record as this will avoid confilits with our existing records. These flat files are picked up by simple jave application as bytebuffer files and sent to S3 and serves as a raw data backup without any modification. Then the flat files which serves as a backup are reconstructed into a delta table on top of s3 via a spark application(Replica Loader).

As the replica is reconstructed in Delta lake, we can now apply necessary transformations (Denormalization and contextualization). In contextualization we maily add columns from a lookup table based on the certain timestamp and matching them to the timestamp in fact table. For example in contextualization we look a time stamp in fact_workhistory table and search the crew who is incharge of the table to join them to combine the corresponding table record.The data is stored in cassadra NoSQL database.Then we server the data through a spring mvc api based on two major parameters. The reports are based on the query based on the timestamp window which then are used in dashboards and other reports and anylatics.